Shaders

Getting Started

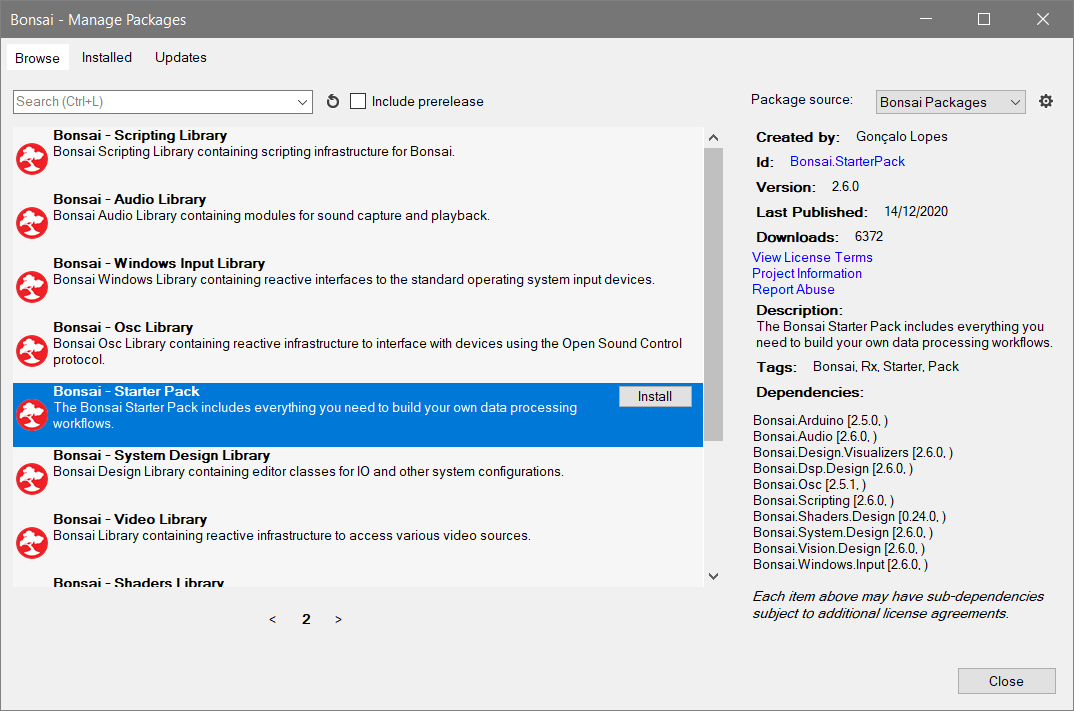

- Install BonVision from the package manager, making sure to select Community Packages in the package source dropdown.

- This should also install the Bonsai.Shaders package as a dependency. Check that it is listed in the Installed tab.

Exercise 1: Creating a display window, loading resources

- Create a CreateWindow source.

- Insert BonVisionResources.

- Insert ShaderResources.

- Insert LoadResources.

- Run the workflow to check it creates a display window.

- Try altering the properties of the CreateWindow source (e.g. ClearColor).

Exercise 2: Rendering a plane

- Create a RenderFrame source.

- Insert an OrthographicView.

- Create a subject for this branch with PublishSubject called ‘DrawCall’.

- Set the boundaries of the OrthographicView to a -1:1 basis (bottom: -1, left: -1, top: 1, right: 1).

- Subscribe to the DrawCall subject.

- Insert a CreateVector4.

- Insert an UpdateUniform. Set the ShaderName to ‘Color’ and the UniformName to ‘color’.

- Insert a DrawMesh. Set the MeshName to ‘Plane’ and the ShaderName to ‘Color’.

- Run the workflow and check that the plane is being rendered in the display window.

- What happens when the CreateVector4 parameters are changed?

- Look at the Color shader. Can you explain its behaviour in response to changes in CreateVector4?
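To make the -1:1 view bounds concrete, here is a minimal Python sketch of the standard orthographic mapping from view coordinates to normalized device coordinates. The function name `ortho_to_ndc` is hypothetical and this is not BonVision's implementation. It only illustrates that with bounds of -1 and 1 the mapping leaves coordinates unchanged, while wider bounds shrink the drawn geometry.

```python
def ortho_to_ndc(x, y, left=-1.0, right=1.0, bottom=-1.0, top=1.0):
    """Map a point in view coordinates to normalized device coordinates.

    Standard orthographic mapping: the view rectangle is stretched so that
    left/bottom land on -1 and right/top land on +1.
    """
    ndc_x = 2.0 * (x - left) / (right - left) - 1.0
    ndc_y = 2.0 * (y - bottom) / (top - bottom) - 1.0
    return ndc_x, ndc_y


# With the -1:1 bounds from this exercise the mapping is the identity.
print(ortho_to_ndc(0.5, -0.25))

# With wider (illustrative) bounds the same point lands closer to the centre.
print(ortho_to_ndc(0.5, -0.25, left=-2.0, right=2.0, bottom=-2.0, top=2.0))
```

Changing the bounds in the sketch is a quick way to predict how the plane will move or resize before editing the OrthographicView properties.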

Exercise 3: Creating a custom fragment shader

- Create a new shader file called posColor.frag and add the following code:

    #version 400
    in vec2 texCoord;
    out vec4 fragColor;

    void main() {
        fragColor = vec4(texCoord.x, texCoord.y, 0.0, 1.0);
    }

- Edit ShaderResources (double-click) and add a new Material.

  - Add ‘posColor’ in the Name field.

  - Select the posColor.frag shader file created in the previous step as the FragmentShader.

  - We’ll borrow the vertex shader from BonVision for now, paste BonVision:Shaders.Quad.vert in the VertexShader field.

  - Close the Shader configuration editor with OK.

- Remove CreateVector4 and UpdateUniform from the DrawCall branch.

- Change ShaderName on DrawMesh to posColor.

- Run the workflow and observe the color profile of the rendered quad. Can you explain the color gradient pattern?

- Add a Scale after the DrawCall subscription and set X: 2, Y: 2.

- Insert an UpdateUniform, set the ShaderName to posColor and the UniformName to transform. Which shader is this uniform targeting?
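The effect of the Scale step on geometry can be sketched on the CPU. The corner positions below are an assumption chosen for illustration (a unit plane with corners at ±0.5), not values read from BonVision, and `scale_vertices` is a hypothetical helper. The sketch only shows that a scale of X: 2, Y: 2 multiplies each position by 2.

```python
def scale_vertices(vertices, sx, sy):
    """Apply a 2D scale to a list of (x, y) vertex positions."""
    return [(x * sx, y * sy) for x, y in vertices]


# Assumed plane corners (hypothetical values chosen for illustration).
plane = [(-0.5, -0.5), (0.5, -0.5), (0.5, 0.5), (-0.5, 0.5)]

scaled = scale_vertices(plane, 2.0, 2.0)
print(scaled)
```

Under that assumption, the scaled corners reach the edges of the -1:1 view set up in Exercise 2.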

Exercise 4: Dynamically updating shader properties

- Update the posColor.frag shader file to include a new uniform double property and use it as a parameter of the output fragColor:

    #version 400
    uniform double dVal;
    in vec2 texCoord;
    out vec4 fragColor;

    void main() {
        fragColor = vec4(texCoord.x, texCoord.y, dVal, 1.0);
    }

Note: a uniform is another kind of shader variable declaration, alongside in and out. A uniform holds the same value for every shader invocation within a draw call and is visible to every shader stage that declares it, whereas in and out variables carry per-vertex or per-fragment data from one stage to the next.
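The difference between a uniform and a per-fragment input can be modelled on the CPU. The Python sketch below is only an illustration: `pos_color` and `render` are hypothetical names, and the 3x3 "image" stands in for the fragments of the rendered quad. The texture coordinate changes from pixel to pixel, while `d_val` is passed unchanged to every call.

```python
def pos_color(tex_coord, d_val):
    """CPU model of the posColor fragment shader: returns (r, g, b, a)."""
    u, v = tex_coord
    return (u, v, d_val, 1.0)


def render(width, height, d_val):
    """Evaluate the shader once per pixel of a tiny width x height image."""
    image = []
    for row in range(height):
        line = []
        for col in range(width):
            # texCoord differs for every fragment ...
            tex_coord = (col / (width - 1), row / (height - 1))
            # ... while d_val is the same for all of them.
            line.append(pos_color(tex_coord, d_val))
        image.append(line)
    return image


image = render(3, 3, d_val=0.25)

# Collect the blue channel of every pixel: the set holds one shared value.
blue_values = {pixel[2] for line in image for pixel in line}
print(blue_values)

# Two opposite corners differ in red and green but not in blue.
print(image[0][0], image[2][2])
```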

- In a new branch add a MouseMove source.

- Insert NormalizedDeviceCoordinates to normalize mouse position relative to the display window size, and expose the X coordinate output.

- Insert Rescale to remap the X coordinate between 0 and 1 (Max: 1, Min: -1, RangeMax: 1, RangeMin: 0).

- Insert an UpdateUniform with ShaderName as posColor and UniformName as dVal.
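The two numeric steps in this branch are both linear remappings, which can be sketched in Python as follows. The helper names `to_ndc` and `rescale` and the 800-pixel window width are assumptions for illustration. Details such as pixel-centre handling in NormalizedDeviceCoordinates are not modelled.

```python
def to_ndc(pixel_x, window_width):
    """Map a pixel column in [0, window_width] to the range [-1, 1]."""
    return 2.0 * pixel_x / window_width - 1.0


def rescale(value, min_in=-1.0, max_in=1.0, range_min=0.0, range_max=1.0):
    """Linearly remap value from [min_in, max_in] to [range_min, range_max]."""
    fraction = (value - min_in) / (max_in - min_in)
    return range_min + fraction * (range_max - range_min)


# A hypothetical 800-pixel-wide window: left edge, centre, right edge.
for pixel_x in (0, 400, 800):
    print(pixel_x, rescale(to_ndc(pixel_x, 800)))
```

With the property values given in the exercise, the left edge of the window maps to 0, the centre to 0.5 and the right edge to 1, which is a convenient range for a color channel.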

Exercise 5: Working with time

Aside from spatial information, we may also want our shader to be dynamic in time.

- Update the posColor.frag shader file to include a new uniform float to represent time.

- Change the fragColor output to vary with time in one of the color channels:

    #version 400
    uniform double dVal;
    uniform float time;
    in vec2 texCoord;
    out vec4 fragColor;

    void main() {
        fragColor = vec4(texCoord.x, sin(time), dVal, 1.0);
    }

- Update the Bonsai workflow to Accumulate the elapsed time of RenderFrame. What does this accumulation represent?

- Convert the output to a float with an ExpressionTransform: Convert.ToSingle(it)

- Use the result to update the time property of the shader with UpdateUniform.

You should now have a fragment shader that adjusts its color with:

- Spatial position of the pixel on screen

- Time since the start of display

- User input

- Optional: experiment with using other GLSL core functions to produce different shader behaviors based on this input data.
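As a starting point for experimenting, the Python sketch below models the time input on the CPU. It assumes a steady 60 Hz display and that color values written to the window end up limited to the range 0 to 1, as is typical for a fixed-point framebuffer. All names are hypothetical. It compares `sin(time)` with the remapped variant `0.5 + 0.5 * sin(time)`.

```python
import math


def accumulate(time_steps):
    """Running sum of per-frame time steps."""
    total = 0.0
    totals = []
    for step in time_steps:
        total += step
        totals.append(total)
    return totals


def clamp01(value):
    """Limit a color value to the displayable range [0, 1]."""
    return max(0.0, min(1.0, value))


# Hypothetical frame durations in seconds (600 frames at a steady 60 Hz).
times = accumulate([1.0 / 60.0] * 600)

raw = [clamp01(math.sin(t)) for t in times]
remapped = [clamp01(0.5 + 0.5 * math.sin(t)) for t in times]

# Fraction of frames on which each variant is completely dark.
dark_raw = sum(v == 0.0 for v in raw) / len(raw)
dark_remapped = sum(v == 0.0 for v in remapped) / len(remapped)
print(dark_raw, dark_remapped)
```

In this model the raw sine spends a sizeable share of frames at zero because its negative half is clamped, whereas the remapped version keeps varying throughout. The same remapping can be written directly in the GLSL expression.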

Exercise 6: Audio visualizer

- Create a new shader file fractalPyramid.frag:

Note: This exercise uses a shader adapted from an existing ShaderToy example. You can optionally use a different shader from this site and adapt it yourself.

    #version 400

    in vec2 texCoord;
    out vec4 fragColor;

    uniform float iTime;
    uniform vec2 offset = vec2(0.5, 0.5);
    uniform float SCALE = 100;
    uniform float mod = 1.0;

    vec3 palette(float d){
        return mix(vec3(0.2,0.7,0.9),vec3(1.,0.,1.),d);
    }

    vec2 rotate(vec2 p,float a){
        float c = cos(a);
        float s = sin(a);
        return p*mat2(c,s,-s,c);
    }

    float map(vec3 p){
        for( int i = 0; i<8; ++i){
            float t = iTime*0.2;
            p.xz =rotate(p.xz,t);
            p.xy =rotate(p.xy,t*1.89);
            p.xz = abs(p.xz);
            p.xz-=.5;
        }
        return dot(sign(p),p)/5.;
    }

    vec4 rm (vec3 ro, vec3 rd){
        float t = 0.;
        vec3 col = vec3(0.);
        float d;
        for(float i =0.; i<64.; i++){
            vec3 p = ro + rd*t;
            d = map(p)*.5;
            if(d<0.02){
                break;
            }
            if(d>100.){
                break;
            }
            //col+=vec3(0.6,0.8,0.8)/(400.*(d));
            col+=palette(length(p)*.1)/(400.*(d));
            t+=d;
        }
        return vec4(col,1./(d*100.));
    }

    void main() {
        vec2 uv = (texCoord - offset) * SCALE * (1.0/mod);
        vec3 ro = vec3(0.,0.,-50.);
        ro.xz = rotate(ro.xz,iTime);
        vec3 cf = normalize(-ro);
        vec3 cs = normalize(cross(cf,vec3(0.,1.,0.)));
        vec3 cu = normalize(cross(cf,cs));
        vec3 uuv = ro+cf*3. + uv.x*cs + uv.y*cu;
        vec3 rd = normalize(uuv-ro);
        vec4 col = rm(ro,rd);
        fragColor = col;
    }

    //Adapted from https://www.shadertoy.com/view/tsXBzS

- Set up a display window and resource loading as before.

- Create a new material in the ShaderResources. Use fractalPyramid.frag as the FragmentShader and use the same vertex shader as before, BonVision:Shaders.Quad.vert.

- Expose the TimeStep.ElapsedTime property from RenderFrame and use Accumulate to accumulate the value.

- Convert the data type of the Accumulate output to a float with an ExpressionTransform: Convert.ToSingle(it)

- Use the output of ExpressionTransform to update the iTime uniform in the fractalPyramid shader with UpdateUniform.

- To get an audio feedback value we’ll construct a branch that acts as an audio amplitude filter.

- Create an AudioCapture source, then a Pow operator with the power set to 2. Squaring makes every sample non-negative, so this gives us an amplitude measure that ignores the sign of the audio buffer.

- Use Average to average the buffer amplitude to a single value, then expose the Val0 output property.

- Use another Pow to rescale the output (e.g. 0.5) and use a PublishSubject called e.g. AudioLevel.

- In a different branch, subscribe to DrawCall again and Scale the plane as before.

- Use WithLatestFrom to pair the outputs with the latest AudioLevel value, using an ExpressionTransform to convert the type to a float.

- Use the audio value output of WithLatestFrom to update the mod property of the fractalPyramid shader.

- The rendered plane should now react to both time and audio level, acting as a simple audio visualizer.
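The audio branch above (square, average, then raise to the power 0.5) amounts to a root-mean-square level when the second power is 0.5. The Python sketch below models it on plain lists. The function names and the synthetic 440 Hz test tones are assumptions for illustration and stand in for buffers from AudioCapture.

```python
import math


def audio_level(buffer):
    """Square each sample, average, then take the square root (RMS)."""
    squared = [sample ** 2 for sample in buffer]   # Pow with power 2
    mean_square = sum(squared) / len(squared)      # Average
    return mean_square ** 0.5                      # Pow with power 0.5


def tone(amplitude, n=4410, freq=440.0, rate=44100.0):
    """Hypothetical audio buffer: a sine tone with the given peak amplitude."""
    return [amplitude * math.sin(2 * math.pi * freq * i / rate) for i in range(n)]


quiet = audio_level(tone(0.1))
loud = audio_level(tone(0.8))
print(quiet, loud)
```

A louder buffer gives a proportionally larger level, and silence gives zero. Since the shader divides by mod, a level of exactly zero is worth guarding against, for example by adding a small offset before updating the uniform.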

Exercise 7: Using textures

Instead of setting pixel color programmatically, we can sample pixel colors from a pre-defined texture.

- Create a new fragment shader called textureShader.frag:

    #version 400
    uniform sampler2D tex;
    in vec2 texCoord;
    out vec4 fragColor;

    void main() {
        vec4 texel = texture(tex, texCoord);
        fragColor = texel;
    }

- We are using a new property type sampler2D to represent our input texture here, as well as another built-in GLSL function texture to retrieve texels (a.k.a. pixel colors) from our texture.

- To render our texture we need to add another resource operator TextureResources in the initialisation branch. Download a texture to use from the course GitHub. Double-click TextureResources and add a new ImageTexture and give it a Name (e.g. vertGrat). For the FileName property, use the dialog to select the downloaded texture.

- Subscribe to DrawCall and Scale the final DrawMesh as before.

- Add a BindTexture operator after UpdateUniform and bind your named texture (set in the TextureName field) to the textureShader shader.

- You should now see the texture rendered in the display window when the workflow is run.
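What a texture lookup does can be approximated on the CPU. The Python sketch below models nearest-texel sampling with clamp-to-edge behaviour. This is an assumption, since the actual filtering and wrapping used by the GLSL texture function depend on how the texture is configured. `sample_nearest` and the tiny grating are hypothetical stand-ins for the sampler and the downloaded image.

```python
def sample_nearest(texture, tex_coord):
    """Look up the texel closest to a normalized (u, v) coordinate.

    texture is a list of rows; each texel is an (r, g, b, a) tuple.
    Coordinates outside [0, 1] are clamped to the edge.
    """
    height = len(texture)
    width = len(texture[0])
    u = min(max(tex_coord[0], 0.0), 1.0)
    v = min(max(tex_coord[1], 0.0), 1.0)
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return texture[row][col]


WHITE = (1.0, 1.0, 1.0, 1.0)
BLACK = (0.0, 0.0, 0.0, 1.0)

# A tiny stand-in for a vertical grating: columns alternate white and black.
grating = [[WHITE, BLACK, WHITE, BLACK] for _ in range(2)]

print(sample_nearest(grating, (0.1, 0.5)))
print(sample_nearest(grating, (0.3, 0.5)))
```

Because the coordinate is normalized, the same lookup works for a texture of any resolution. Only the horizontal coordinate matters for this grating, which is why it appears as vertical stripes.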

Exercise 8: Camera textures

We can also dynamically update the texture that is passed to the shader. We can use this to e.g. manipulate a camera feed.

- Add a new Texture2D texture to the TextureResources operator and name it something like DynamicTexture.

- Change the BindTexture operator to bind to this new texture instead of the image texture.

- Use a CameraCapture and Flip operator to produce a camera feed.

- Connect this to an UpdateTexture with TextureName set to DynamicTexture to update the texture on each new camera image.

- You should now see the camera feed rendered in the display window.

Optional: Now that you have a camera image rendered via a texture and shader, you can take advantage of GPU acceleration to produce interesting image effects. Try writing a new fragment shader to manipulate the camera image, for example by combining the image with a second texture or applying a Sobel filter for edge detection.
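Before writing the Sobel filter as a shader, it can help to check the arithmetic on the CPU. The Python sketch below applies the two standard 3x3 Sobel kernels to a small grayscale image stored as nested lists. The function name and the 5x5 test image are hypothetical, and border pixels are simply left at zero. In a fragment shader the inner loops would become nine texture lookups around the current coordinate.

```python
def sobel_magnitude(image):
    """Return the Sobel gradient magnitude for the interior of a grayscale image.

    image is a list of rows of floats. Border pixels are left at 0.0.
    """
    gx_kernel = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
    gy_kernel = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]
    height, width = len(image), len(image[0])
    result = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0.0
            for ky in range(3):
                for kx in range(3):
                    value = image[y + ky - 1][x + kx - 1]
                    gx += gx_kernel[ky][kx] * value
                    gy += gy_kernel[ky][kx] * value
            result[y][x] = (gx * gx + gy * gy) ** 0.5
    return result


# Hypothetical 5x5 test image: dark left half, bright right half.
image = [[0.0, 0.0, 0.0, 1.0, 1.0] for _ in range(5)]
edges = sobel_magnitude(image)
for row in edges:
    print(row)
```

The response is non-zero only in the columns next to the dark-to-bright boundary, which is the behaviour to look for when the same filter runs on the camera texture.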